Human memory is limited and selective. That’s why we forget highly relevant facts from our past that would help us properly understand, question or challenge the situations we face in the present, and prepare for what is to come.

Artificial Intelligence has been around… for decades. It is nothing new, nothing transgressive, but its applications and the so-called democratized access to technology have created the latest great disruption of our lifetime (I can count dozens of these disruptions in recent years). But is it so important, so good for humanity, such a cornerstone of progress? Well, let’s look into it.

‘Technology’, derived from the Greek word ‘Technologia’ (Τεχνολογία), means the systematic treatment of practical arts and/or applied science. It also means the system by which a society provides its members with the things they need or desire.

Almost instant adoption

OpenAI (ChatGPT) took 5 days to reach 1 million registered users. Netflix, then the main technology disruptor with its uncontested capacity for content streaming, needed 3.5 years to get there. Twitter, another standard of technology democratization and of the digitalization of social interaction, took about 2 years to reach its first million users, and so on. Millions of users in a few weeks: that sounds like either a total revolution or a masterful marketing strategy. Yet nobody stopped to think it over quietly; people rushed to the website, started using it, and were mesmerized by ChatGPT’s apparently advanced capacity to understand and communicate with humans.

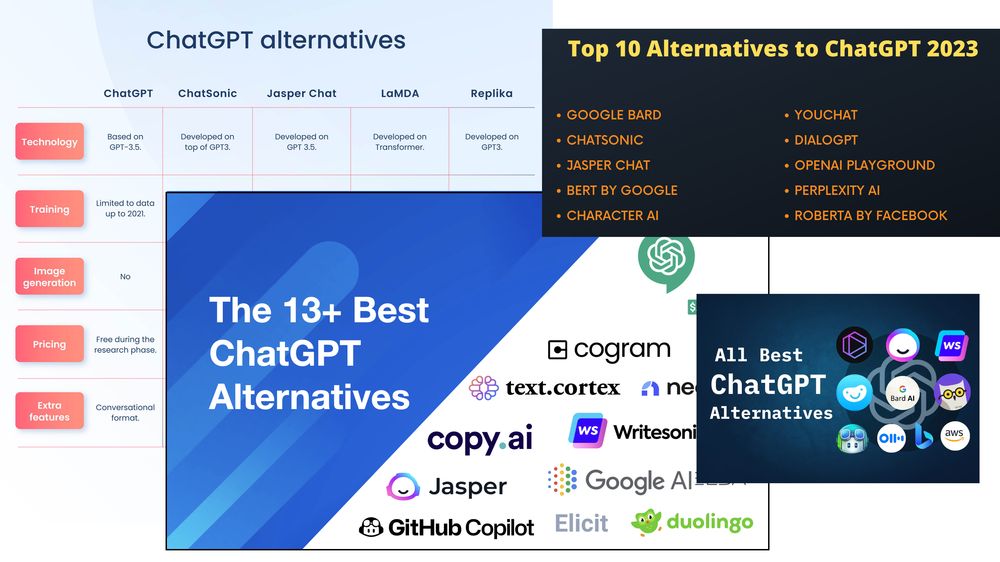

In the last month, AI solutions have suddenly been appearing everywhere, each claiming sovereignty over a specific area: one AI for education, another for academic research, others for image and video generation, and a bunch of them for development and coding (e.g. hashnode.com). Awareness is also rising of ChatGPT’s bias and of its lack of accuracy in specific content areas. After all, AI is something programmed, then trained and improved, but ultimately designed by humans, with their defects, flaws and biases. Yet marketing and communication, social media and advertising have done their part, driven by these platforms’ economic interest in making money out of anyone blinded by the ultimate technological leap: make the story so appealing that nobody will say “nah, I pass.”

Side effects

It is a revolution. But revolutions are drastic changes, and these are not always positive: idealizing revolutions is part of the human desire for change, but in the pursuit of a better life the side effects have to be taken seriously. The memory of past revolutions can teach us the downsides of beheading people in the main square, eliminating social differences, suddenly changing packaging materials, or settling for farming instead of hunting. Rapid changes usually bring problems (the Chinese word for revolution, 革命, can be read literally as “taking lives”), and this “technological revolution” is no exception.

OK, maybe I am being too dramatic, or I am just a denier of the new science, but there are some signs of the problems these apparently godlike tools may bring. For over a decade we have all been hearing that we have the best educated and best prepared generations ever, born digital and ready to take the wellbeing of humanity to the next level. And maybe it is so. But what if the constant relaxation of education requirements, the ever easier access to information, the near extinction of handwriting (and its beneficial effect on memory) and the ultimate sense of not needing to know anything in detail because AI will provide make us less capable? More dependent on tech for basic thinking and reasoning than previous generations were?

Time will tell. Since you already have thousands of articles and news stories exalting the virtues of this new era of comfort and prosperity, I recommend you take a look at these few items instead:

- Online tests suggest IQ scores in US dropped for the first time in nearly a century

- Australia and Finland slide further in PISA 2018

- Study shows stronger brain activity after writing on paper than on tablet or smartphone

- Idiocracy